
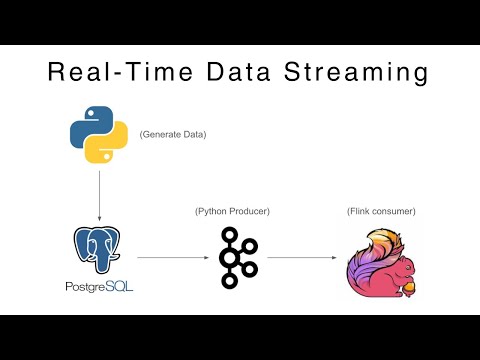

In this comprehensive tutorial, you'll join us on the journey to build a robust real-time data streaming pipeline from scratch! We dive deep into Apache Kafka for efficient event ingestion, Apache Flink for powerful stream processing, and Postgres for storing and analyzing real-time insights.

What you'll learn:

Setting up Apache Kafka for event streaming.

Building Apache Flink jobs for real-time data processing.

Integrating Flink with Kafka for seamless data flow.

Designing a schema and connecting Postgres as a data sink.

Optimizing the pipeline for scalability and performance.
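To make the Kafka-to-Flink-to-Postgres flow concrete, here is a minimal, dependency-free Python sketch. It is not the code from the video: a Python list stands in for a Kafka topic, an in-memory keyed tumbling-window sum stands in for a Flink job, and `sqlite3` stands in for Postgres. The event fields (`user_id`, `amount`, `event_time`), the 60-second window, and the `user_spend` table are all hypothetical.

```python
import json
import sqlite3
from collections import defaultdict
from datetime import datetime, timezone

# Stand-in for a Kafka topic: an ordered list of JSON-encoded events.
# The field names below are hypothetical, chosen only for illustration.
topic = [
    json.dumps({"user_id": "u1", "amount": 20.0, "event_time": "2024-01-01T10:00:05"}),
    json.dumps({"user_id": "u2", "amount": 5.5, "event_time": "2024-01-01T10:00:30"}),
    json.dumps({"user_id": "u1", "amount": 10.0, "event_time": "2024-01-01T10:00:50"}),
    json.dumps({"user_id": "u1", "amount": 7.0, "event_time": "2024-01-01T10:01:10"}),
]

WINDOW_SECONDS = 60  # assumed tumbling-window size


def window_start(ts: str) -> str:
    """Align an ISO timestamp (treated as UTC) to the start of its tumbling window."""
    dt = datetime.fromisoformat(ts).replace(tzinfo=timezone.utc)
    epoch = int(dt.timestamp())
    start = epoch - epoch % WINDOW_SECONDS
    return datetime.fromtimestamp(start, tz=timezone.utc).strftime("%Y-%m-%dT%H:%M:%S")


def process(events):
    """Flink-style keyed tumbling-window sum, computed in memory."""
    totals = defaultdict(float)
    for raw in events:
        event = json.loads(raw)
        key = (window_start(event["event_time"]), event["user_id"])
        totals[key] += event["amount"]
    return totals


def sink(conn, totals):
    """Upsert window aggregates into a table; sqlite3 stands in for Postgres."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS user_spend ("
        " window_start TEXT, user_id TEXT, total REAL,"
        " PRIMARY KEY (window_start, user_id))"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO user_spend VALUES (?, ?, ?)",
        [(w, u, t) for (w, u), t in totals.items()],
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
sink(conn, process(topic))
rows = conn.execute("SELECT * FROM user_spend ORDER BY window_start, user_id").fetchall()
print(rows)
```

In a real deployment, Flink's Kafka connector and JDBC sink would replace these stand-ins, and the upsert would use Postgres's `INSERT ... ON CONFLICT` rather than SQLite's `INSERT OR REPLACE`.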

Why real-time data streaming is important:

Real-time data processing is a game changer for industries looking for instant insights from their data. Whether you work in finance, e-commerce, or IoT, understanding how to design a robust streaming pipeline is a valuable skill in today's data-driven world.

Who should watch:

Data engineers

Software developers

Data scientists

Anyone interested in real-time data processing

Timestamps:

00:00 – Pipeline architecture

06:50 – Set up Docker Compose file

12:50 – Set up Postgres DB

16:00 – Set up the data-generation Python service

22:35 – Set up Python Kafka Producer service

29:10 – Set up Flink Kafka Consumer Service

51:20 – End-to-end testing
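The data-generation service covered in the timestamps can be approximated with the standard library alone. This is a hypothetical sketch, not the video's code: the transaction schema, the `generate_transaction` and `generate_batch` helpers, and the seeded random generator (used so runs are reproducible) are assumptions. Each event is serialized to UTF-8 JSON bytes, a common payload format for a Kafka producer.

```python
import json
import random
import uuid
from datetime import datetime, timedelta


def generate_transaction(rng: random.Random, base_time: datetime, i: int) -> dict:
    """Build one fake transaction event; the schema is hypothetical."""
    return {
        # Deterministic UUID built from the seeded RNG, so batches are reproducible.
        "transaction_id": str(uuid.UUID(int=rng.getrandbits(128), version=4)),
        "user_id": f"user_{rng.randint(1, 5)}",
        "amount": round(rng.uniform(1.0, 500.0), 2),
        "currency": rng.choice(["USD", "EUR", "GBP"]),
        # Events are spaced one second apart starting at base_time.
        "event_time": (base_time + timedelta(seconds=i)).isoformat(),
    }


def generate_batch(n: int, seed: int = 42) -> list:
    """Generate n events from a fixed seed and start time."""
    rng = random.Random(seed)
    base = datetime(2024, 1, 1, 10, 0, 0)
    return [generate_transaction(rng, base, i) for i in range(n)]


if __name__ == "__main__":
    for event in generate_batch(3):
        # A producer service would send these bytes to a Kafka topic.
        payload = json.dumps(event).encode("utf-8")
        print(payload)
```

Keeping generation separate from producing lets the generator be tested without a running broker; the producer service then only handles serialization and delivery.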

Requirements:

Basic knowledge of Kafka, Flink, and Postgres concepts.

Knowledge of Java and SQL is a plus.

Note:

This tutorial assumes a working knowledge of the basics of Apache Kafka, Apache Flink, and Postgres. If you're new to these technologies, check out our beginner-friendly guides listed in the description.

Code and resources:

https://www.buymeacoffee.com/bytecoach/e/213878

Don't forget to like, subscribe and hit the bell icon to stay up to date with our latest tutorials! Let's embark on this exciting journey into the realm of real-time data streaming together!

#ApacheKafka #ApacheFlink #Postgres #RealTimeData #DataStreaming #datapipeline

LIKE, SHARE, AND SUBSCRIBE FOR MORE VIDEOS LIKE THIS!

---

Hello people! I am a software engineer with a passion for teaching.

Please follow me and show your support so I can keep bringing you content like this.

YouTube: https://www.youtube.com/channel/UCpmw7QtwoHXV05D3NUWZ3oQ

Instagram: https://www.instagram.com/code_with_kavit/

GitHub: https://github.com/Kavit900

Discord: https://discord.gg/hQSNN3XeNz

THANKS FOR WATCHING!

If you found this video helpful, please connect with me and share it with your friends and family.